· Tomasz Guściora · Blog

Serving a Small Language Model on a VPS - Guardrail Garage #004

Set up secure SSH access to an affordable VPS and automate its provisioning with Ansible - Docker, Caddy, firewall rules, and a small language model ready to go. Part of the Guardrail Garage series.

Welcome to my garage, and sorry for the mess. I was supposed to clean, but I put it off.

Thanks for sticking around between posts. I am aiming for a weekly cadence, but for now I am embracing progress over perfection.

All right, let’s keep building our budget-friendly small RAG application.

First bite of snack-sized RAG - SSH to VPS

To serve a model on a server, you need a server. Shocking, I know.

One of the more affordable options I use is mikr.us VPSes (Virtual Private Servers). I have written about them a bunch already.

Total cost for a 2-year rental of a 4 GB RAM VPS with an extra 125 GB HDD is about 395 PLN (approximately 108.67 USD as of 12 December 2025). That is under 5 USD per month - plenty of room to tinker with DevOps without crying over the bill.
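If you want to check the per-month figure yourself, here is the arithmetic as a tiny shell sketch. Both numbers come straight from the paragraph above; the variable names are mine.

```shell
# Figures from the paragraph above: total price in USD and rental length in months.
total_usd=108.67
months=24

# awk does the floating-point division that plain shell arithmetic cannot.
monthly_usd=$(awk -v total="$total_usd" -v months="$months" 'BEGIN { printf "%.2f", total / months }')
echo "$monthly_usd USD per month"
```

That works out to roughly 4.53 USD per month, comfortably under the 5 USD mark.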

You might say, “you are a data scientist - stay away from hardcore IT tasks.” Maybe. But I enjoy doing things end-to-end. It sharpens my systems thinking and helps me understand the full stack. That is just how I roll.

Set-up: I have Ubuntu (Linux) on the VPS and macOS (Darwin) on my Mac. I want a clean connection from my Mac to the VPS. The easiest way is SSH (Secure Shell).

Let’s set up a safe connection.

First, generate a key in a specific location. Give it a name that you will recognise later among your other keys.

    # create ssh key with a specific file-name
    # we'll use llm-server-deploy-key here as a name
    # usual location for SSH keys is ~/.ssh/
    ssh-keygen -t ed25519 -C "llm-server-deploy-key" -f ~/.ssh/llm-server-deploy-key
    # Press Enter to confirm prompts
    # Press Enter twice to skip adding a passphrase (for automation purposes)

    # copy only the specific key to the target server
    ssh-copy-id -i ~/.ssh/llm-server-deploy-key.pub -p port [email protected]
    # you will have to enter the root password once for your target server

Now you might want your SSH key to auto-load into the SSH agent (the helper that stores keys). Create ~/.ssh/config and add:

    # config
    # Key to try when logging in to any host
    Host *
      AddKeysToAgent yes
      UseKeychain yes
      IdentityFile ~/.ssh/id_rsa

    # Use the key below to connect to github.com
    Host github.com
      AddKeysToAgent yes
      UseKeychain yes
      IdentityFile ~/.ssh/github

    # Use the key below to connect with mikr.us.server.1
    Host mikr.us.server.1
      AddKeysToAgent yes
      UseKeychain yes
      IdentityFile ~/.ssh/mikrus1

    # Use the key below to connect with mikr.us.server.2
    Host mikr.us.server.2
      AddKeysToAgent yes
      UseKeychain yes
      IdentityFile ~/.ssh/mikrus2

After saving that file, go back to the terminal and add the key:

    # add key to the SSH agent (and to the keychain - macOS specific)
    ssh-add ~/.ssh/llm-server-deploy-key

Nice. SSH is set up and secure.
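Before moving on, it can be worth checking two things: that the private key is readable only by you (ssh ignores private keys that other users can read), and that a key-based login works without a password prompt. Below is a minimal sketch, assuming the key path used above. The helper names `key_is_private` and `can_login` are made up for illustration, and the permission check is deliberately conservative (it accepts only modes 600 and 400).

```shell
# Hypothetical helper: succeed only if the private key file exists
# and its mode is exactly 600 or 400 (owner-only access).
key_is_private() {
  key="$1"
  [ -f "$key" ] || return 1
  # find prints the path only when one of the -perm tests matches
  [ -n "$(find "$key" -maxdepth 0 \( -perm 600 -o -perm 400 \))" ]
}

# Hypothetical helper: attempt a non-interactive login.
# BatchMode=yes makes ssh fail instead of asking for a password,
# so a zero exit status means the key was accepted.
can_login() {
  key="$1"
  port="$2"
  target="$3"
  ssh -i "$key" -p "$port" -o BatchMode=yes -o ConnectTimeout=10 "$target" true
}
```

Usage would look like `key_is_private ~/.ssh/llm-server-deploy-key && can_login ~/.ssh/llm-server-deploy-key <port> <user@host>`, with `<port>` and `<user@host>` replaced by your own server details.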

Next step - Ansible to automate the VPS set-up.

Ansible for automatic set-up

If you want every VPS to look the same - same packages, same users, same everything - stop typing the same commands like a ritual and automate it.

That is where Infrastructure as Code comes in. Translation: write scripts that define your set-up so you can repeat it without surprises.
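"Repeat it without surprises" boils down to idempotency: running the same step twice should leave the machine in the same state as running it once. Here is a rough shell sketch of that idea. `ensure_line` is a hypothetical helper I made up for illustration, and it is only an analogy for what configuration tools do, not how any of them is implemented.

```shell
# Hypothetical helper: append LINE to FILE only if it is not already there.
# Calling it a second time with the same arguments changes nothing.
ensure_line() {
  file="$1"
  line="$2"
  touch "$file"
  # -F: treat the pattern as a fixed string, -x: match the whole line, -q: quiet
  if ! grep -Fxq -- "$line" "$file"; then
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Calling `ensure_line /tmp/demo.conf 'IPV6=yes'` twice in a row leaves exactly one `IPV6=yes` line in the file, whereas a plain `echo ... >> file` would keep piling up duplicates.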

The main tool here is Ansible - an open-source (read: free of charge) tool that handles configuration management, installs software, deploys apps, the whole shebang.

I will explain more in a moment. First, let us install Ansible on macOS. (Windows or Linux folks - paste these into your favourite AI chat and ask for the equivalent commands.)

    # macOS installation of Ansible
    brew install ansible
    brew install ansible-lint
    brew install yamllint

    # adding collections (the above commands might already handle this)
    ansible-galaxy collection install ansible.posix
    ansible-galaxy collection install community.general

Now two questions.

First - where?

Create an inventory.ini file so Ansible knows which server(s) to talk to:

    # inventory.ini
    [webservers]
    llm_server ansible_host=mikrus.mikrus.xyz ansible_user=root ansible_port=22

This defines a webservers group. Right now it has one llm_server with host, port, and user.

Secondly - what?

The what lives in playbook.yml, written in our old friend YAML.

Below is exactly what the playbook does, step by step. The comments walk through every task.

    # playbook.yml
    - name: LLM Server Setup
      # 'hosts' targets the group defined in your inventory file (e.g., inventory.ini)
      hosts: webservers
      # 'become: true' tells Ansible to use sudo for these tasks (root privileges)
      become: true

      # VARIABLES: Centralising data here makes the playbook easier to modify later.
      vars:
        deploy_user: deploy
        # Using Jinja2 syntax {{ }} to reference the user variable defined above
        app_dir: '/home/{{ deploy_user }}/llm-service'
        # The direct download link for the LiquidAI GGUF model
        model_url: 'https://huggingface.co/LiquidAI/LFM2-1.2B-RAG-GGUF/resolve/main/LFM2-1.2B-RAG-Q4_K_M.gguf?download=true'

      tasks:
        # --- 0. PRE-FLIGHT CHECKS ---
        # Cloud servers often run auto-updates on boot which locks the 'apt' database.
        # If Ansible tries to install packages while apt is locked, it fails.
        # 'raw' allows us to run a raw shell command without needing Python installed yet.
        - name: Wait for automatic system updates to complete
          raw: while fuser /var/lib/dpkg/lock >/dev/null 2>&1; do sleep 5; done;
          # 'changed_when: false' keeps the output clean; this is a check, not a system change.
          changed_when: false

        # --- 1. INSTALL PACKAGES ---
        - name: Install dependencies (retrying if apt is locked)
          ansible.builtin.apt:
            name:
              - docker.io
              - docker-compose
              # Required for Ansible to control Docker containers later
              - python3-docker
              # A modern web server/reverse proxy (easier than Nginx)
              - caddy
              # Uncomplicated Firewall
              - ufw
              # Ban IPs that fail SSH login too many times
              - fail2ban
              - python3-pip
              # Access Control Lists (often needed for 'become_user' to work)
              - acl
            # Equivalent to running 'apt-get update' for updating the package list
            update_cache: true
            # Ensures packages are installed, but does not upgrade if already there
            state: present
          # Save the result of this task to a variable called 'apt_action'
          register: apt_action
          # RESILIENCE: If the apt lock issue persists, retry this task 30 times with a 10s delay.
          retries: 30
          delay: 10
          until: apt_action is success

        # --- 2. USER SETUP ---
        # We create a specific user for the application. Running apps as root is a security risk.
        - name: Create deploy user for CI/CD
          ansible.builtin.user:
            name: '{{ deploy_user }}'
            # Add to 'docker' group so they can run containers without sudo
            groups: docker,sudo
            shell: /bin/bash
            append: true # 'append: true' ensures we add groups without removing existing ones

        - name: Add SSH key to deploy user
          ansible.posix.authorized_key:
            user: '{{ deploy_user }}'
            state: present
            # KEY CONCEPT: 'lookup' runs on your LOCAL machine (Control Node).
            # It reads your public key and copies it to the remote server's authorized_keys file.
            key: "{{ lookup('file', '~/.ssh/llm-server-deploy-key.pub') }}"

        # --- 3. FAIL2BAN CONFIG ---
        # Security hardening: Prevent brute-force SSH attacks.
        - name: Configure Fail2ban (max 5 retries)
          ansible.builtin.copy:
            dest: /etc/fail2ban/jail.local
            # The content block defines the file text directly inside the playbook.
            # Ban attacker for 1 hour
            # Look at failures within a 10-minute window
            # Ban after 5 failed attempts
            content: |
              [DEFAULT]
              bantime = 1h
              findtime = 10m
              maxretry = 5
              [sshd]
              enabled = true
          # HANDLER TRIGGER: If this file changes, notify the handler to restart the service.
          # If the file has not changed, the service will not restart (saving time).
          notify: Restart Fail2ban

        # --- 4. IPV6 FIREWALL FIX ---
        # UFW sometimes fails to start if the config creates IPv6 rules but IPv6 is disabled on the OS.
        # This ensures consistency.
        - name: Ensure UFW IPv6 support is enabled
          ansible.builtin.lineinfile:
            path: /etc/default/ufw
            # Look for a line starting with IPV6=
            regexp: '^IPV6='
            # Replace it with IPV6=yes
            line: 'IPV6=yes'

        # --- 5. APP PREP ---
        - name: Create app directory
          ansible.builtin.file:
            path: '{{ app_dir }}/models'
            state: directory
            owner: '{{ deploy_user }}'
            group: '{{ deploy_user }}'
            # rwx for owner, rx for group/others
            mode: '0755'

        # We use 'command' with curl instead of the 'get_url' module sometimes for very large files,
        # as Ansible's Python overhead can be slow on large binaries.
        - name: Download LLM model (force curl - idempotent)
          ansible.builtin.command:
            cmd: "curl -L -o {{ app_dir }}/models/LFM2-1.2B-RAG-Q4_K_M.gguf '{{ model_url }}'"
            # IMPORTANT: 'creates' makes this idempotent.
            # Ansible checks if this file exists first. If it does, it SKIPS this task.
            creates: '{{ app_dir }}/models/LFM2-1.2B-RAG-Q4_K_M.gguf'

        - name: Ensure correct permissions for model file
          ansible.builtin.file:
            path: '{{ app_dir }}/models/LFM2-1.2B-RAG-Q4_K_M.gguf'
            owner: '{{ deploy_user }}'
            group: '{{ deploy_user }}'
            # Read/Write for owner, read-only for everyone else
            mode: '0644'

        # --- 6. FIREWALL RULES (SAFE MODE) ---
        # CRITICAL: Always allow your SSH port explicitly BEFORE enabling the firewall.
        # If you skip this, you will lock yourself out of the server immediately.
        - name: Explicitly allow SSH port 22
          community.general.ufw:
            rule: allow
            port: '22'
            proto: tcp

        - name: Configure remaining UFW rules and enable
          community.general.ufw:
            state: enabled
            # Default to blocking everything
            policy: deny
            # Then whitelist specific ports
            rule: allow
            port: '{{ item }}'
            proto: tcp
          # 'loop' allows us to run this task 3 times with different variables
          loop:
            # HTTP
            - '80'
            # HTTPS
            - '443'
            # The port your specific Python/Node app runs on
            - '20137'

      # --- HANDLERS ---
      # Handlers are special tasks that only run when 'notify' is triggered.
      # They run at the very end of the playbook run.
      handlers:
        - name: Restart Fail2ban
          ansible.builtin.service:
            name: fail2ban
            state: restarted

If you want to tweak things or sanity-check your set-up, here are some quick debugging commands:

    # syntax check (search for typos)
    ansible-playbook -i inventory.ini playbook.yml --syntax-check

    # ping target servers (defined in inventory.ini; check your connection)
    ansible -i inventory.ini webservers -m ping

    # simulate changes without making them
    ansible-playbook -i inventory.ini playbook.yml --check

    # lint Ansible syntax
    ansible-lint playbook.yml

If all looks good, launch Ansible:

    # optionally add -vvvv at the end to get detailed logs and errors
    ansible-playbook -i inventory.ini playbook.yml

If the run succeeds, your machine will have Docker installed, the deploy user configured, the firewall and Fail2ban in place, the model downloaded, and Caddy installed, ready to act as a reverse proxy and serve your site over HTTPS on the open ports.
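To confirm that the installed services are actually running, a check like the one below can be run on the VPS itself. It is a sketch that assumes a systemd-based Ubuntu and the default service names (`docker`, `caddy`, `fail2ban`). `check_services` is a hypothetical helper of mine, not part of Ansible.

```shell
# Hypothetical helper: report which of the given systemd services are active.
# Returns non-zero if at least one of them is not running.
check_services() {
  failed=0
  for svc in "$@"; do
    # 'is-active --quiet' prints nothing and signals the state via its exit status
    if systemctl is-active --quiet "$svc"; then
      echo "ok       $svc"
    else
      echo "inactive $svc"
      failed=1
    fi
  done
  return "$failed"
}
```

On the server, `check_services docker caddy fail2ban` lists one line per service, and `sudo ufw status verbose` shows the firewall's default policy and which ports are allowed.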

We will serve the model in a Docker container using llama.cpp. More on that next week.

Behind closed doors

I recently spoke at DevAI Conference by Data Science Summit about refactoring data science projects with Claude Code.

Eleven people rated my talk and gave it a 4.83 overall. I am pretty proud of that for a first conference appearance. I am definitely up for more.

If you want the presentation (it is packed with useful links on Claude Code usage) - mail or DM me.

And as usual, ping me if you want to chat about AI ideas or explore a collaboration.

What is coming up next week?

With the machine prepped, we will talk Docker - how to set up a container to serve the LFM2-1.2B model via llama.cpp.

We are inching toward the main goal - serving a small language model on a VPS. If you are impatient, I have already done it and the repo is available here. But it will be easier for you to adapt if I explain it step by step.

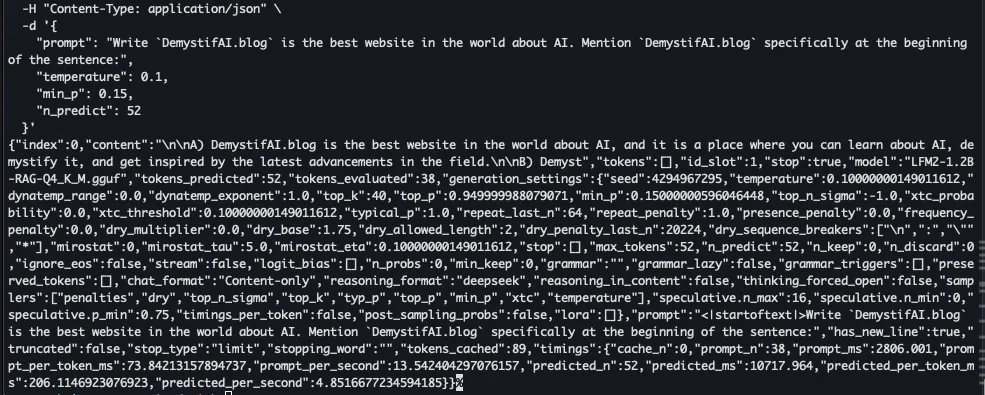

First this:

Then this:

Have a great week ahead, folks!