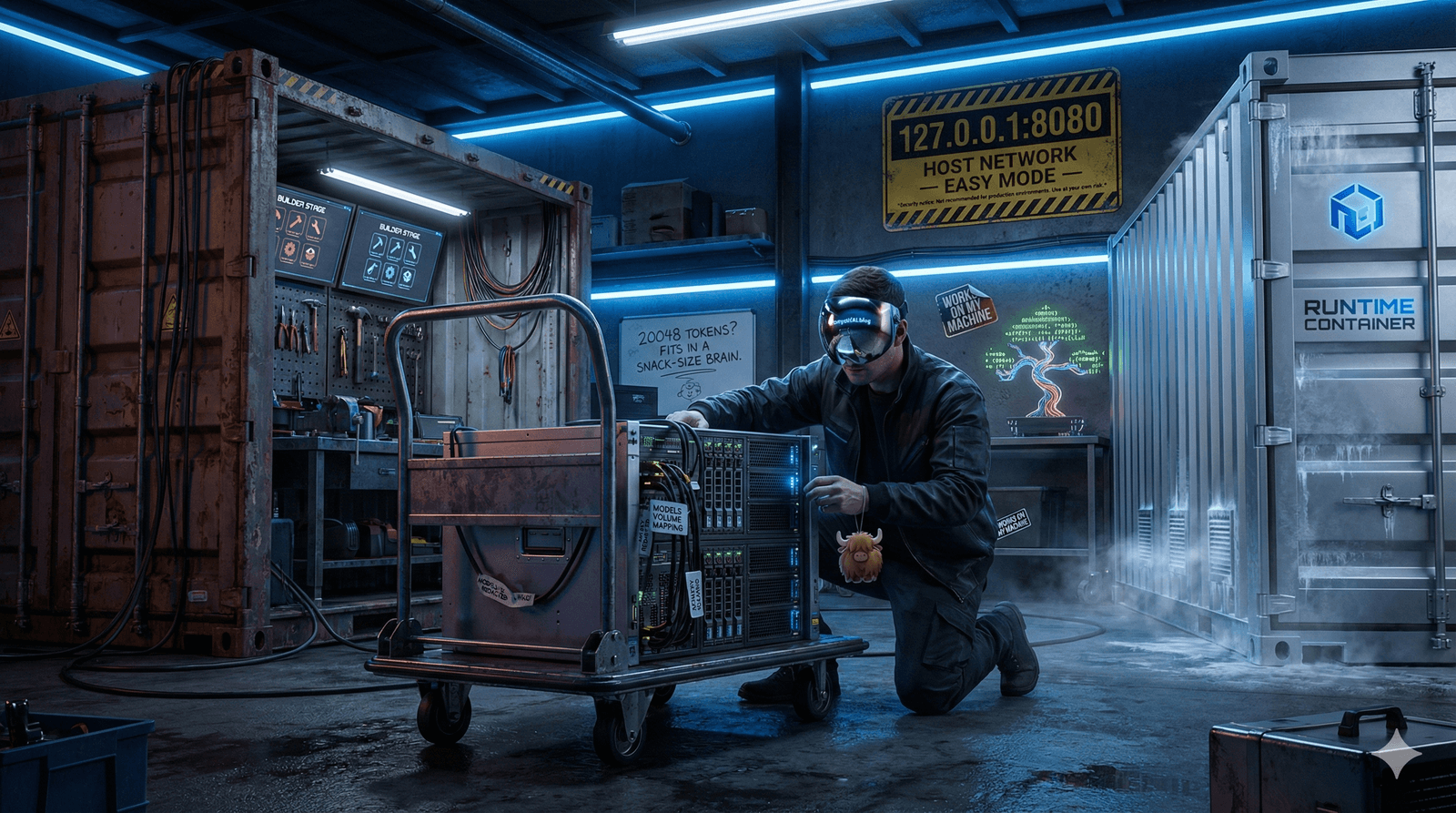

Small Language Model continued: Docker - Guardrail Garage #005

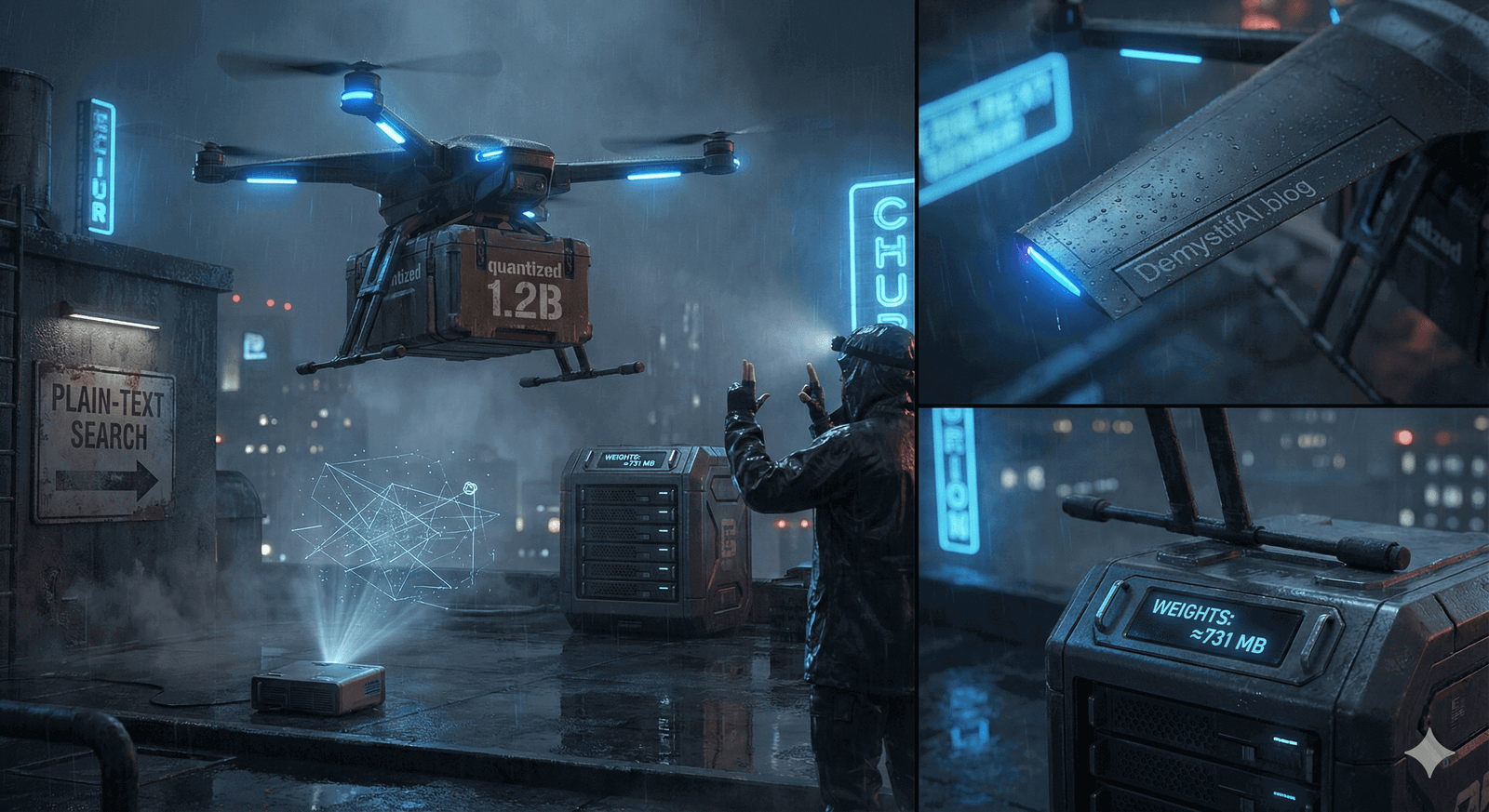

This instalment shows how to build a lightweight llama.cpp server image and wire it up with docker-compose and Caddy on a VPS. It picks up from last week's server preparation and sets the stage for CI/CD in the next instalment.